What is regularization in plain English? - Cross Validated

Is regularization really ever used to reduce underfitting? In my experience, regularization is applied on a complex/sensitive model to reduce complexity/sensitivity, but never on a simple/insensitive model to …

L1 & L2 double role in Regularization and Cost functions?

Mar 19, 2023 · Regularization is a way of sacrificing the training loss value in order to improve some other facet of performance, a major example being to sacrifice the in-sample fit of a machine learning …

What are Regularities and Regularization? - Cross Validated

Is regularization a way to ensure regularity? i.e. capturing regularities? Why do ensembling methods like dropout, normalization methods all claim to be doing regularization?

When to use regularization methods for regression?

Jul 24, 2017 · In what circumstances should one consider using regularization methods (ridge, lasso or least angle regression) instead of OLS? In case this helps steer the discussion, my main interest is …

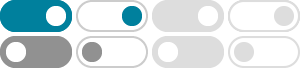

When will L1 regularization work better than L2 and vice versa?

Nov 29, 2015 · Note: I know that L1 has feature selection property. I am trying to understand which one to choose when feature selection is completely irrelevant. How to decide which regularization (L1 or …

How does regularization reduce overfitting? - Cross Validated

Mar 13, 2015 · A common way to reduce overfitting in a machine learning algorithm is to use a regularization term that penalizes large weights (L2) or non-sparse weights (L1) etc. How can such …

What does regularization mean in xgboost (tree)

Feb 17, 2019 · In xgboost (xgbtree), gamma is the tuning parameter to control the regularization. I understand what regularization means in xgblinear and logistic regression, but in the context of tree …

Why do we only see $L_1$ and $L_2$ regularization but not other norms?

Mar 27, 2017 · The intuition behind regularization is that I have some vector, and I would like that vector to be "small" in some sense. How do you describe a vector's size? Well, you have choices: Do you …

Why is the L2 regularization equivalent to Gaussian prior?

Dec 13, 2019 · I keep reading this and intuitively I can see this but how does one go from L2 regularization to saying that this is a Gaussian Prior analytically? Same goes for saying L1 is …
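
For reference, the analytic step this question asks about can be sketched as follows. This is a minimal derivation under assumptions that are not part of the question itself: a linear model $y = Xw + \varepsilon$ with noise $\varepsilon \sim \mathcal{N}(0, \sigma^2 I)$ and an independent zero-mean Gaussian prior $w \sim \mathcal{N}(0, \tau^2 I)$. The symbols $X$, $w$, $\sigma$ and $\tau$ are introduced here only for illustration.

$$
\begin{aligned}
\hat{w}_{\text{MAP}}
&= \arg\max_w \; \log p(y \mid X, w) + \log p(w) \\
&= \arg\max_w \; -\frac{1}{2\sigma^2}\,\lVert y - Xw \rVert_2^2 \;-\; \frac{1}{2\tau^2}\,\lVert w \rVert_2^2 \\
&= \arg\min_w \; \lVert y - Xw \rVert_2^2 \;+\; \lambda\,\lVert w \rVert_2^2,
\qquad \lambda = \frac{\sigma^2}{\tau^2}.
\end{aligned}
$$

Under these assumptions the MAP estimate coincides with the $L_2$-penalized (ridge) least-squares solution, and a tighter prior (smaller $\tau$) corresponds to a larger penalty $\lambda$. Repeating the same steps with a Laplace prior, whose log-density is proportional to $-\lVert w \rVert_1$, gives an $L_1$ penalty instead.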

What is the meaning of regularization path in LASSO or related sparsity ...

If we select different values of the parameter $\lambda$, we could obtain solutions with different sparsity levels. Does it mean the regularization path is how to select the coordinate that could get faster …
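
To illustrate how different values of λ give different sparsity levels, here is a minimal sketch in Python. It assumes an orthonormal design, in which case the lasso solution for the objective ½‖y − Xb‖² + λ‖b‖₁ is the soft-thresholded OLS estimate. The helper names (`soft_threshold`, `lasso_path_orthonormal`) and the coefficient values are hypothetical, chosen only for illustration; a general design would need an iterative solver instead.

```python
def soft_threshold(z, lam):
    """Shrink z toward zero by lam; anything within [-lam, lam] becomes exactly 0."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


def lasso_path_orthonormal(ols_coefs, lambdas):
    """Return one coefficient vector per lambda.

    Assumes an orthonormal design, so each lasso coefficient is the
    soft-thresholded OLS coefficient (illustrative assumption only).
    """
    return [[soft_threshold(b, lam) for b in ols_coefs] for lam in lambdas]


# Hypothetical OLS coefficients and penalty grid, for illustration only.
ols_coefs = [3.0, -1.5, 0.4, 0.0]
lambdas = [0.0, 0.5, 2.0, 4.0]

path = lasso_path_orthonormal(ols_coefs, lambdas)

# Count the nonzero coefficients at each point on the path.
nonzeros = [sum(1 for b in coefs if b != 0.0) for coefs in path]

for lam, coefs, k in zip(lambdas, path, nonzeros):
    print(f"lambda={lam}: coefs={coefs}, nonzero={k}")
```

In this toy setting the number of nonzero coefficients falls as λ grows, and the sequence of solutions indexed by λ is what is usually meant by the regularization path.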